The Uncomfortable Awakening

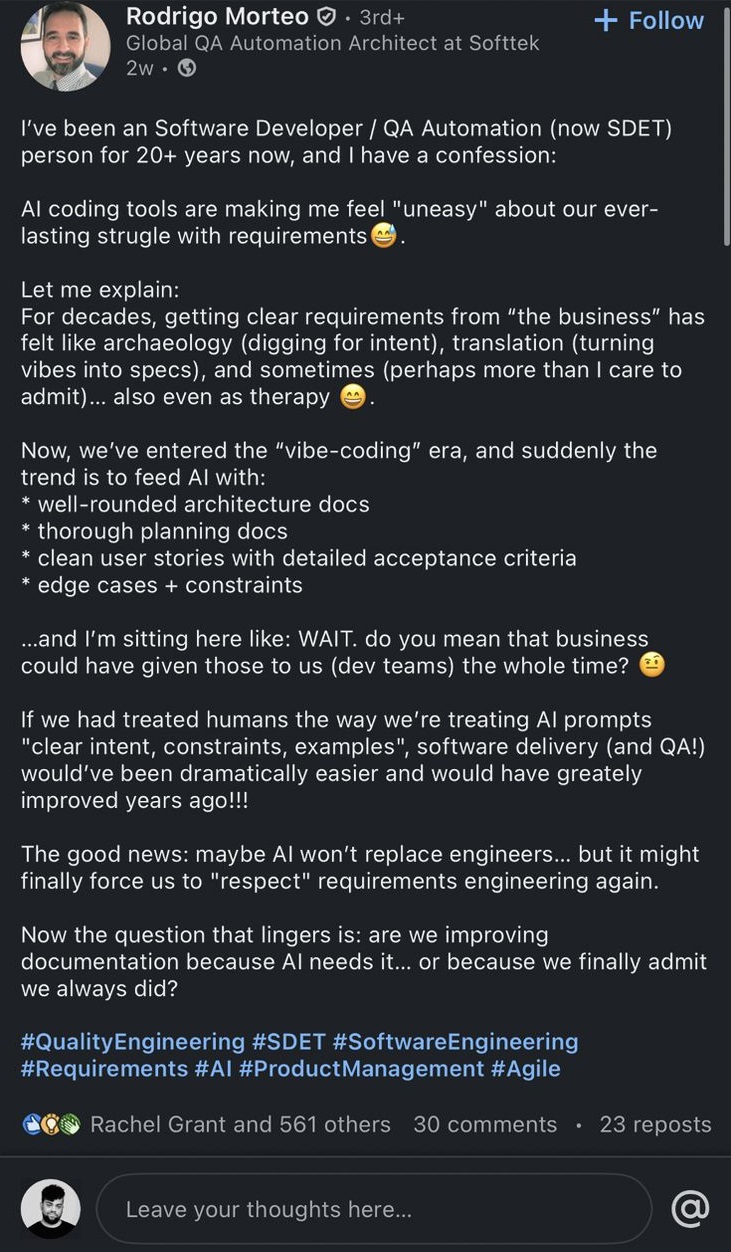

Recently I came across a LinkedIn post by Rodrigo Morteo that hit a nerve. After 20+ years in software development and QA automation, he confesses that AI tools are making him feel uncomfortable about our daily struggle with requirements.

His point caught my attention. We’ve entered the “vibe-coding” era, where suddenly everyone is feeding AI with well-structured architecture documents, detailed planning documents, clean user stories with detailed acceptance criteria, and edge cases with their constraints also thoroughly documented.

The goal of this text isn’t to refute my friend, but rather to add my thoughts to his post, with more details below.

The Requirements Era

As a dev with over a decade of experience in the banking, retail, and e-gaming sectors, I’ve lived through the archaeology of requirements gathering that Rodrigo describes. The endless meetings trying to decode almost always vague requests from the business. The “we’ll know it when we see it” or “let’s focus on the quick win” approach to acceptance criteria (quick wins seriously get on my nerves, honestly). The refinements that don’t always refine…

AI doesn’t just receive better documentation; most of the time we give it something better than what we give to developers. Almost always we’re creating documentation for the first time, specifically to write prompts for AI tools. The business hasn’t suddenly become more articulate, and people aren’t more willing to do a great job describing requirements. It’s a kind of reverse engineering of clarity from ambiguity to feed the machine. (Laziness?) So much so that this very text was reviewed by an LLM to deliver slightly better grammar than what I use daily.

Just One Point of View

Everyone has access to AI now. ChatGPT, GitHub Copilot, Claude… the list keeps growing fast. Democratization happened quickly, and code is now being generated at an unprecedented scale. Documentation hasn’t been left behind: it’s growing exponentially. User stories are more detailed. Architecture diagrams are more polished. People who had never seen a Mermaid diagram can now deliver any type of diagram from a vague prompt.

But here’s the critical question, from my point of view: who’s actually supervising or reading this stuff?

When developers wrote code from vague requirements, at least there was a person in the process interpreting, questioning, jumping on calls. When QA tested features, they applied critical thinking to ambiguous specifications. The friction in the process, as frustrating as it was, served as a quality gate. And honestly, that friction is important.

There’s an interesting connection between his post and one by Simon Smart, “When your code is a maze, smart developers make maps”. In my view, that is where ambiguities used to be resolved: when we had access to devs and their maps. Read alongside this post, it shows how enriching the dev-tester interaction is.

Now we’re in a situation where:

- Junior developers (or not) prompt AI to generate entire features

- AI creates tests for AI-generated code (not to mention context window limitations)

- AI reviews AI code

- Documentation is enhanced by AI without domain expertise validation

- Requirements are clarified by AI interpreters instead of business stakeholders or team members

Productivity has increased dramatically, but are quality and actual delivery really increasing?

From the Testing and Development Side

From a development and quality perspective, this creates a perfect storm:

Previous process:

Vague requirements or POC idea delivered by stakeholders

→ Developers interpret

→ QA validates wrong interpretations

→ Iterative refinement

With AI:

Vague initial prompts to AI agents generate detailed-looking requirements

→ Refinement is done by AI, covering gaps without requirements validation

→ Tests and code are generated from those requirements, with or without AI

→ Refinement goes through more rounds with AI before reaching a stakeholder

The intermediate steps (the critical thinking, the domain knowledge, the “does this actually make sense for the business?” checkpoint) are disappearing. Deploys are faster, we ship more features, and yes, I do believe AI is becoming increasingly capable of generating code. But still, we are the human agents of AI agents.

I’ve seen AI-generated test automation suites that are technically correct but test the wrong things. The same happens with code: dozens of duplicated pieces of logic and deprecated functions left behind to keep an old version of the POC alive. All of it was delivered quickly and performs well, yet it grows the maze without creating the maps Simon Smart mentions. I’ve reviewed acceptance criteria that look comprehensive but miss the actual business logic. The documentation exists, but it wasn’t validated. It wasn’t questioned.
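To make “technically correct but tests the wrong things” concrete, here is a small sketch in Python. Everything in it is made up for illustration: the business rule (orders of 100.00 or more get 10% off), the function names, and the values are my assumptions, not something from a real project.

```python
# Hypothetical rule, assumed for this sketch: orders of 100.00 or more get 10% off.
DISCOUNT_THRESHOLD_CENTS = 10_000


def apply_discount(total_cents: int) -> int:
    """Hypothetical generated implementation with a boundary bug: '>' instead of '>='."""
    if total_cents > DISCOUNT_THRESHOLD_CENTS:
        return total_cents * 90 // 100
    return total_cents


def generated_style_check() -> bool:
    """Mirrors the code: only probes values far from the boundary, so it passes."""
    return apply_discount(20_000) == 18_000 and apply_discount(5_000) == 5_000


def business_rule_check() -> bool:
    """Encodes the assumed stakeholder rule: exactly 100.00 must be discounted."""
    return apply_discount(10_000) == 9_000


if __name__ == "__main__":
    print("generated-style check passes:", generated_style_check())
    print("business-rule check passes:", business_rule_check())
```

The first check is green and would look fine in a report. The second one fails, and it only exists if somebody asked the business what happens at exactly 100.00. That question is the part of the process I’m afraid we’re skipping.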

Are We Improving or Just Performing?

Are we creating the illusion of documentation without the substance?

The real value of requirements engineering goes beyond the artifacts: it’s the conversations, the negotiations, the shared understanding that emerges from the process. When business analysts, developers, and QA sat together and hammered out what a feature really meant, that’s where clarity lived. (Please don’t take this as a call for in-office work.)

Now we’re optimizing for AI consumption, not for human understanding. We’re creating prompts, not specifications. We’re losing the shared mental models and perhaps the clarity of what we want to achieve.

Maybe part of this is also the cultural shift I’ve been living through recently. I’m from the country of “jeitinho” (the Brazilian way of finding creative workarounds), of human contact that’s present in all interactions. But that’s a post for another time.

The Answer

Simple: I don’t have it. :D Those who know me know how much I enjoy raising questions without answering them.

The Bottom Line

AI coding tools make something clear that we’ve always known: good requirements were always possible, we just didn’t prioritize them. But the solution isn’t to generate mountains of documentation for AI consumption while leaving human understanding behind. Yes, it’s hard to fight against the wave of serotonin that comes from seeing something created so quickly and apparently correctly.

The dangerous reality is that we’re scaling code generation faster than we’re scaling oversight and understanding. Everyone has access to powerful AI tools, but not everyone has the experience to know when those tools are confidently wrong.

As someone who has spent years in software engineering and quality, I know that:

- More documentation ≠ better quality

- More tests ≠ better coverage

- More code ≠ more value

- More speed ≠ innovation

Now What

I hope AI forces us to respect requirements engineering again. But creating genuine understanding, not just feeding the machine, is a challenge that will take some work to overcome.

I’ll leave you with “how do we ensure people remain at the center of the quality process?” or, going even further, “how do we keep people at the center of all processes?”, since everything, whatever it may be, has a customer who will use or benefit from what’s being developed.

Anyway, just another brain dump from my head.